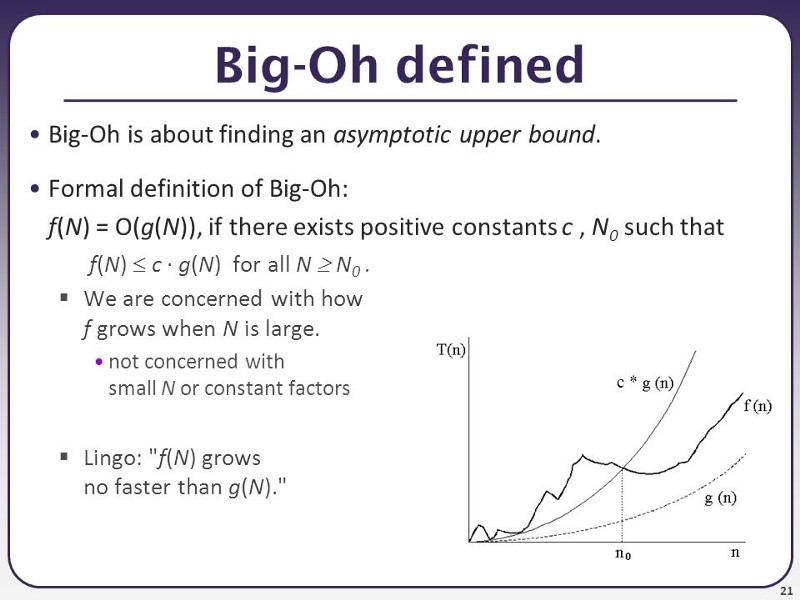

Here is a picture, that may help a little bit. The n is input size, and f(n) is how long does the algorithm runs (i.e how many instructions) it takes to calculate it for input for size n, i.e for finding smallest element in an array, n would be the number of elements in the array. g(n) is then the function you have in O, so if you have O(n^2) algorithm, the g(n) = n^2

Basically, you are looking for how quickly it grows for extreme values of N, while also disregarding constants. The graph representation probably isn’t too useful for figuring the O value, but it can help a little bit with understanding it - you want to find a O function where from one point onward (n0), the f(n) is under the O function all the way into infinity.

It has been a while since I have to deal with problem complexities in college, is there even class of problems that would require something like this, or is there a proven upper limit/can this be simplified? I don’t think I’ve ever seen O(n!^k) class of problems.

Hmm, iirc non-deterministic turing machines should be able to solve most problems, but I’m not sure we ever talked about problems that are not NP. Are there such problems? And how is the problem class even called?

Oh, right, you also have EXP and NEXP. But that’s the highest class on wiki, and I can’t find if it’s proven that it’s enough for all problems. Is there a FACT and NFACT class?