Aussie living in the San Francisco Bay Area.

Coding since 1998.

.NET Foundation member. C# fan

https://d.sb/

Mastodon: @dan@d.sb

- 0 Posts

- 687 Comments

User agents are essentially deprecated and are going to become less and less useful over time. The replacement is either client hints or feature detection, depending on what you’re using it for.

Most developers just write their own feature checks (a lot of detections are just a single line of code) or use a library that polyfills the feature if it’s missing.
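
To make the "single line of code" point concrete, here is a minimal sketch. `hasFeature` and the `features` object are names made up for this example, and the three features checked are arbitrary picks. `navigator.userAgentData` is the entry point for client hints in Chromium-based browsers, so its absence is itself something to detect.

```typescript
// Hypothetical helper: true when `name` exists on the given host object.
function hasFeature(host: object | undefined, name: string): boolean {
  return host !== undefined && name in host;
}

// globalThis is standard, so this also runs outside a browser.
const root = globalThis as unknown as Record<string, unknown>;

const features = {
  // Each check really is a one-liner.
  intersectionObserver: hasFeature(root, "IntersectionObserver"),
  structuredClone: typeof root["structuredClone"] === "function",
  // Client hints: only present where the browser implements them.
  clientHints: hasFeature(root["navigator"] as object | undefined, "userAgentData"),
};

console.log(features);
```

Code would then branch on `features.clientHints` (or load a polyfill) instead of parsing the user agent string.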

The person you’re replying to is right, though. Modernizr popularized this approach. It predates npm, and npm still isn’t their main distribution method, so the npm download numbers don’t mean anything.

That’s exactly what you’re supposed to do with the modern web, via feature detection and client hints.

The user agent in Chrome (and I think Firefox too) is “frozen” now, meaning it no longer receives any major updates.

That really depends on the company. At big tech companies, it’s common for the levels and salary bands to be the same for both generalists (or full stack or whatever you want to call them) and specialists.

It also changes depending on market conditions. For example, frontend engineers used to be in higher demand than backend and full-stack.

Not sure how that’s relevant, but some states do have an equivalent to GDPR. California has CCPA for example.

e.g. Outlook replaces links in mails so they can scan the site first. Also some virus scanners offer mail protection, checking the site that’s linked to first, before allowing the mail to end up in the user’s mail client.

Proofpoint does this too, but AFAIK they all just change the link rather than go to it. The link is checked when the user actually clicks on it. Makes sense to do it on-demand because the contents of the link can change between when the email is received and when the user actually clicks it.

(DSGVO is the German version of GDPR)

This field needs to be checked everywhere the account is used.

Usually something like this would be enforced once in a centralized location (in the data layer / domain model), rather than at every call site.
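
A rough sketch of what "enforced once" could look like. The `deactivatedAt` field and the `AccountRepository` class are invented for illustration, since the actual field isn't named here:

```typescript
interface Account {
  id: number;
  name: string;
  // Hypothetical stand-in for the field that needs checking.
  deactivatedAt: Date | null;
}

class AccountRepository {
  private readonly accounts: Account[];

  constructor(accounts: Account[]) {
    this.accounts = accounts;
  }

  // The only public way to load an account, so the check lives in one place.
  findActive(id: number): Account | undefined {
    return this.accounts.find((a) => a.id === id && a.deactivatedAt === null);
  }
}

const repo = new AccountRepository([
  { id: 1, name: "alice", deactivatedAt: null },
  { id: 2, name: "bob", deactivatedAt: new Date(0) },
]);

console.log(repo.findActive(1)?.name);
console.log(repo.findActive(2));
```

Call sites never see the raw list, so a new call site can't forget the check.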

for the automatic removal after x amount of days

This gets tricky because in many jurisdictions, you need to ensure that you don’t just delete the user, but also any data associated with the user (data they created, data collected about them, data provided by third-parties, etc). The fan-out logic can get pretty complex :)

There’s a lot of logos with hidden stuff like that.

Amazon’s logo has an arrow going from A to Z, implying they sell everything “from A to Z”

The Tostitos logo has two people holding chips (the Ts) and a bowl of salsa (the dot on the I):

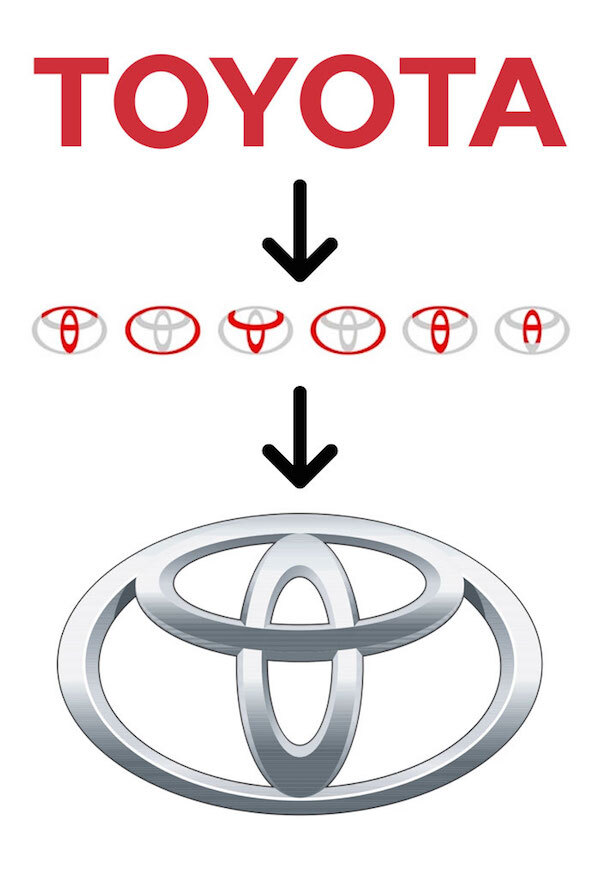

Toyota’s logo has every letter of the company name in it:

The LG logo has the letters L and G in it:

programmers need a self-imposed rule: if it’s less than 500 lines of code, you write it yourself instead of using a pre-written package/library.

That’s what I do, but then I end up with similar utils across multiple projects (eg some of these array, map, and set utils: https://github.com/Daniel15/dnstools/tree/master/src/DnsTools.Web/ClientApp/src/utils) and wonder if I should create a library.

Then I end up doing that (https://github.com/Daniel15/jsframework is my most ‘recent’ one, now very outdated) but eventually the library gets outdated and you end up deleting most of it and starting again. (edit: practically this entire library is obsolete now)

It’s the circle of life.

someone at Google or Mozilla hasn’t decided to write a JavaScript Standard Library.

Core APIs (including data types like strings, collection types like Map, Set, and arrays), Browser, and DOM APIs are pretty good these days. Much better than they used to be, with more features and consistent behaviour across all major browsers. It’s uncommon to need browser-specific hacks for those any more, except sometimes in Safari which acts weird at times.

The main issue is server-side, and neither Google nor Mozilla have a big interest in server side JS. Google mostly uses Python and Java for their server-side code, and Mozilla mostly uses Rust.

Having said that, there’s definitely some improvements that could be made in client-side JS too.

Vanilla JS is pretty good on the client side, but leaves a lot to be desired on the server side in Node.js, even if you include the standard Node.js modules.

For example, there’s no built-in way to connect to a database of any sort, nor is there a way to easily create a basic HTTP REST API - the built in HTTP module is just raw HTTP, with no URL routing, no way to automatically JSON encode responses, no standardized error handling, no middleware layer, etc.
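
To give a feel for how much gets hand-rolled, here is a minimal sketch of the routing, JSON encoding, and error handling you would write yourself. Everything in it (`routes`, `dispatch`, the `/users/:id` route) is made up for the example. In a real app, the callback passed to `createServer` from `node:http` would call `dispatch(req.method, req.url)` and write the result out with `res.writeHead` and `res.end`.

```typescript
type Handler = (args: string[]) => unknown;

interface Route {
  method: string;
  pattern: RegExp;
  handler: Handler;
}

interface Reply {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// Hypothetical route table; a framework would normally provide this.
const routes: Route[] = [
  { method: "GET", pattern: /^\/users\/(\d+)$/, handler: (args) => ({ id: Number(args[0]) }) },
];

// Manual JSON encoding of every response.
function json(status: number, payload: unknown): Reply {
  return {
    status,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Manual URL routing plus a catch-all error handler.
function dispatch(method: string, path: string): Reply {
  for (const route of routes) {
    const match = route.method === method ? route.pattern.exec(path) : null;
    if (match === null) continue;
    try {
      return json(200, route.handler(match.slice(1)));
    } catch (err) {
      return json(500, { error: "Internal Server Error" });
    }
  }
  return json(404, { error: "Not Found" });
}

console.log(dispatch("GET", "/users/42"));
```

That is about 40 lines before any middleware, body parsing, or query string handling, which is why most apps reach for a third-party module instead.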

This means that practically every Node.js app imports third-party modules, and they vary wildly in quality. Some are fantastic, some are okay, and some are absolutely horrible yet somehow get millions of downloads per week.

I’m not sure, sorry. The source control team at work set it up a long time ago. I don’t know how it works - I’m just a user of it.

The linter probably just runs `git diff | grep @nocommit` or similar.
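
Purely as a guess at the shape of such a check (not the actual implementation, which I haven't seen), scanning a unified diff for the marker could look like this. `findMarkers` and `Violation` are made-up names, and the marker is assembled from two pieces so the rule's own source doesn't trip it.

```typescript
// Built from two pieces so this file does not contain the marker itself.
const MARKER = "@no" + "commit";

interface Violation {
  file: string;
  text: string;
}

// Scans a unified diff and reports added lines that contain the marker.
function findMarkers(diff: string): Violation[] {
  const violations: Violation[] = [];
  let file = "(unknown)";
  for (const line of diff.split("\n")) {
    if (line.startsWith("+++ ")) {
      // File header such as "+++ b/src/config.ts"
      file = line.slice(4).replace(/^b\//, "");
    } else if (line.startsWith("+") && line.includes(MARKER)) {
      violations.push({ file, text: line.slice(1).trim() });
    }
  }
  return violations;
}

const sampleDiff = [
  "--- a/src/config.ts",
  "+++ b/src/config.ts",
  "@@ -1,2 +1,3 @@",
  "+// " + MARKER + " temporary for testing",
  "+apiKey = 'blah';",
  " unchanged line",
].join("\n");

console.log(findMarkers(sampleDiff));
```

Only added lines are checked, so removing a marker never counts as a violation.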

Would a git hook block you from committing it locally, or would it just run on the server side?

I’m not sure how our one at work is implemented, but we can actually commit `@nocommit` files in our local repo, and push them into the code review system. We just can’t merge any changes that contain it.

It’s used for common workflows like creating new database entities. During development, the ORM system creates a dev database on a test DB cluster and automatically points the code for the new table to it, with a `@nocommit` comment above it. When the code is approved, the new schema is pushed to prod and the code is updated to point to the real DB.

Also, the codebase is way too large for something like ripgrep to search the whole codebase in a reasonable time, which is why it only searches the commit diffs themselves.

This will inevitably lead to “works on my machine” scenarios

Isn’t this why Docker exists? It’s “works on my machine”-as-a-service.

At my workplace, we use the string `@nocommit` to designate code that shouldn’t be checked in. Usually in a comment:

    // @nocommit temporary for testing
    apiKey = 'blah';
    // apiKey = getKeyFromKeychain();

but it can be anywhere in the file.

There’s a lint rule that looks for `@nocommit` in all modified files. It shows a lint error in dev and in our code review / build system, and commits that contain `@nocommit` anywhere are completely blocked from being merged.

(the code in the lint rule does something like `"@no"+"commit"` to avoid triggering itself)

PhD is literally “Philosophy Doctor” or “Doctor of Philosophy”

I’ve been trying to get it to say that other stores like B&H are better than Amazon (for the lulz) but it keeps saying “I don’t have an answer for that” :(

It probably accepts other key types and it’s just the UI that’s outdated. I doubt they’re using an SSH implementation other than Dropbear or OpenSSH, and both support ed25519.